2026

Beyond Correctness: Exposing LLM-generated Logical Flaws in Reasoning via Multi-step Automated Theorem Proving

Xinyi Zheng*; Ningke Li*; Xiaokun Luan; Kailong Wang; Ling Shi; Meng Sun; Haoyu Wang. (* equal contribution)

48th IEEE/ACM International Conference on Software Engineering (ICSE) 2026

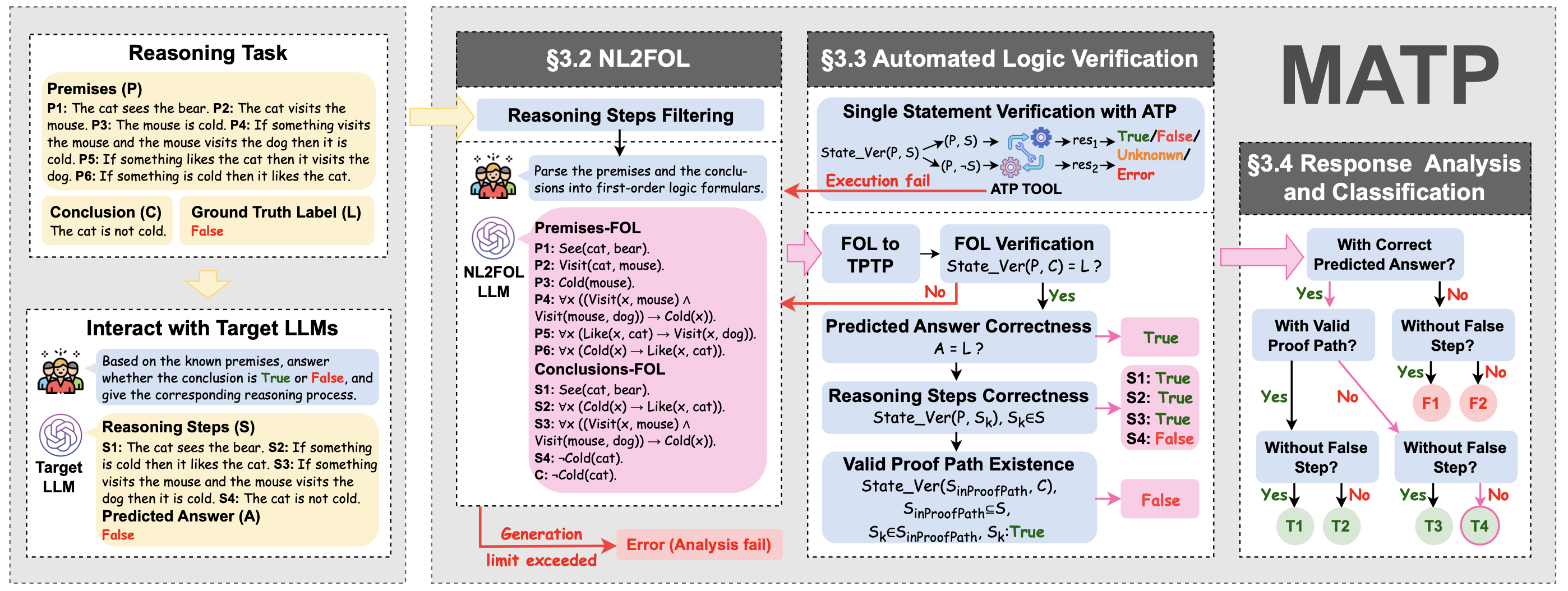

An automated theorem-proving-based framework for verifying multi-step LLM reasoning, translating natural language into first-order logic to detect hidden logical errors and systematically assess reasoning correctness across diverse benchmarks.

2025

Large Language Models are overconfident and amplify human bias

Fengfei Sun*; Ningke Li*; Kailong Wang; Lorenz Goette. (* equal contribution)

Under review 2025

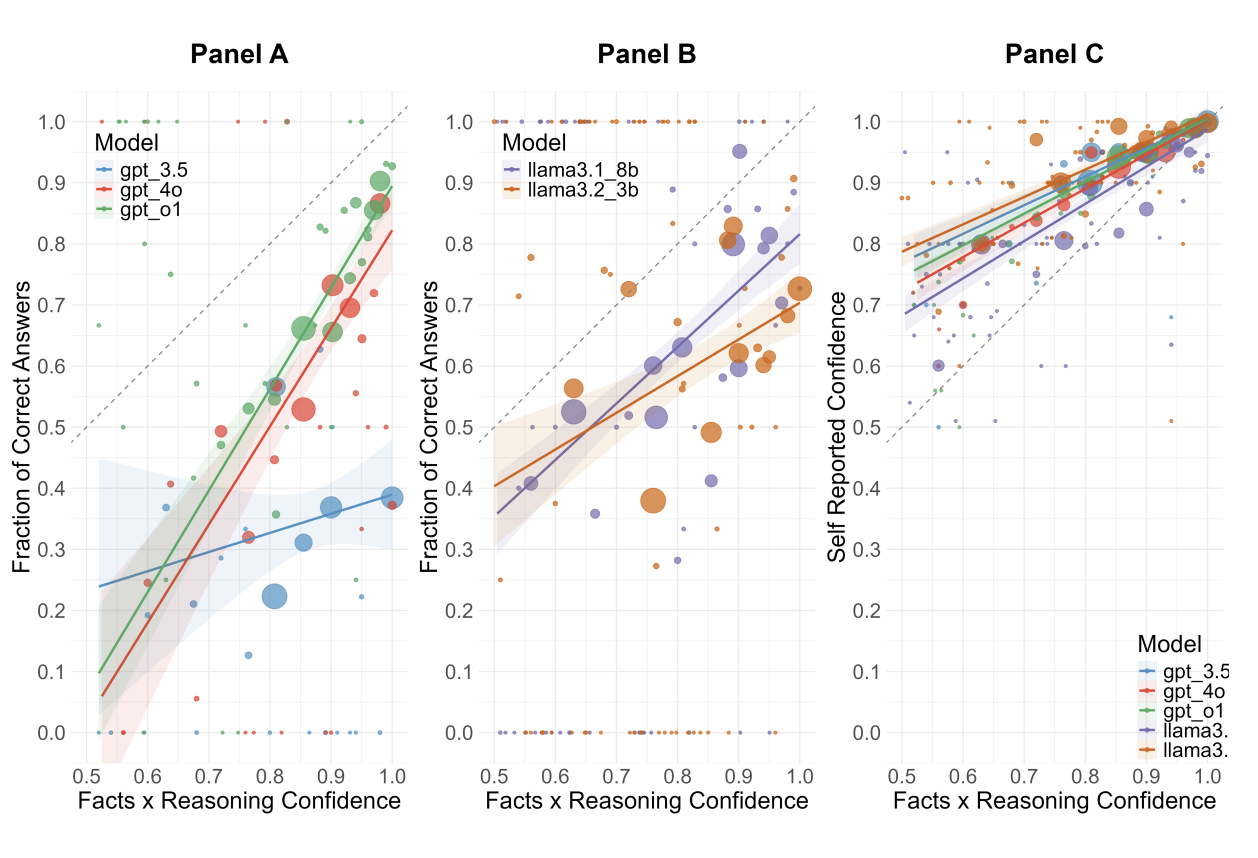

LLMs exhibit significant overconfidence in reasoning tasks, often exceeding human levels, and can amplify human overconfidence when their outputs are used as input.

Large language models for cyber security: A systematic literature review

Hanxiang Xu; Shenao Wang; Ningke Li; Kailong Wang; Yanjie Zhao; Kai Chen; Ting Yu; Yang Liu; Haoyu Wang.

ACM Transactions on Software Engineering and Methodology (TOSEM) 2025

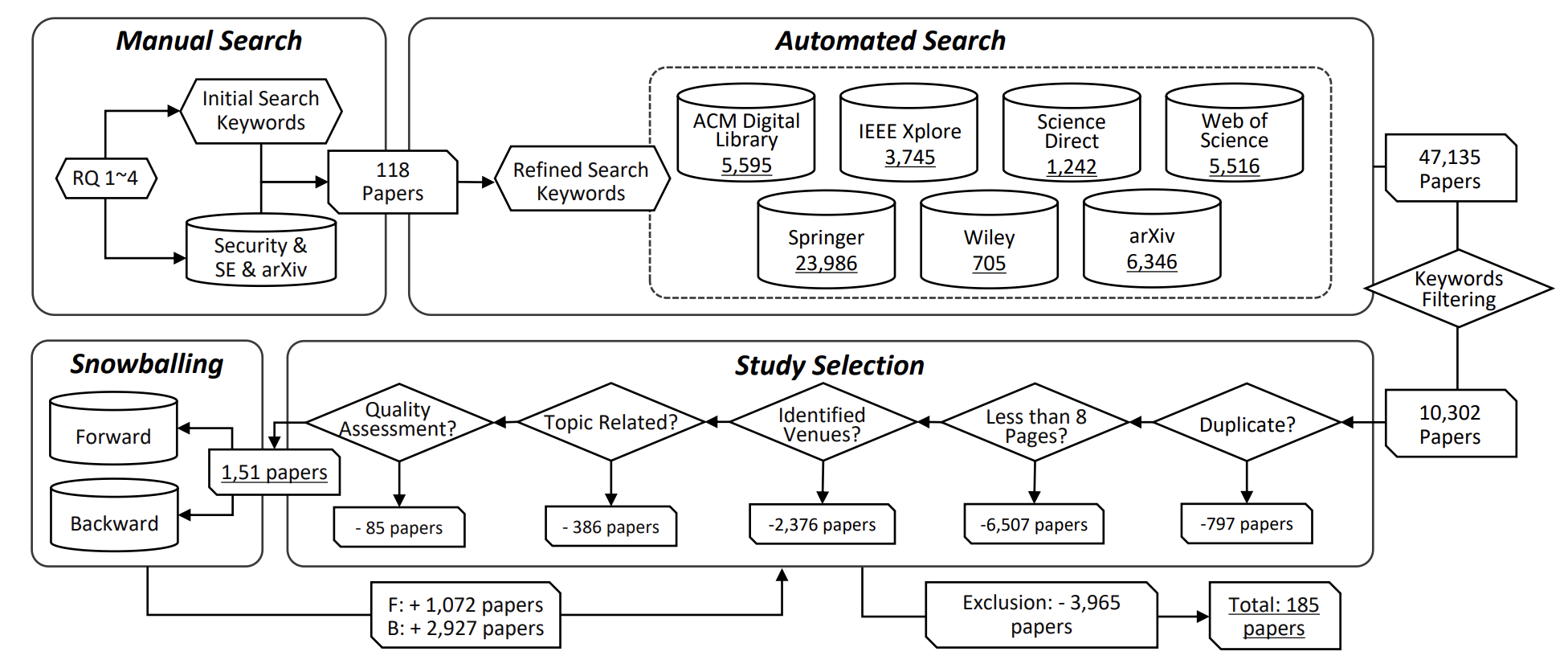

A systematic literature review of LLM use in cybersecurity, analyzing applications, trends, techniques, and challenges.

2024

Drowzee: Metamorphic Testing for Fact-conflicting Hallucination Detection in Large Language Models

Ningke Li*; Yuekang Li*; Yi Liu; Ling Shi; Kailong Wang; Haoyu Wang. (* equal contribution)

Object-Oriented Programming, Systems, Languages & Applications (OOPSLA) 2024

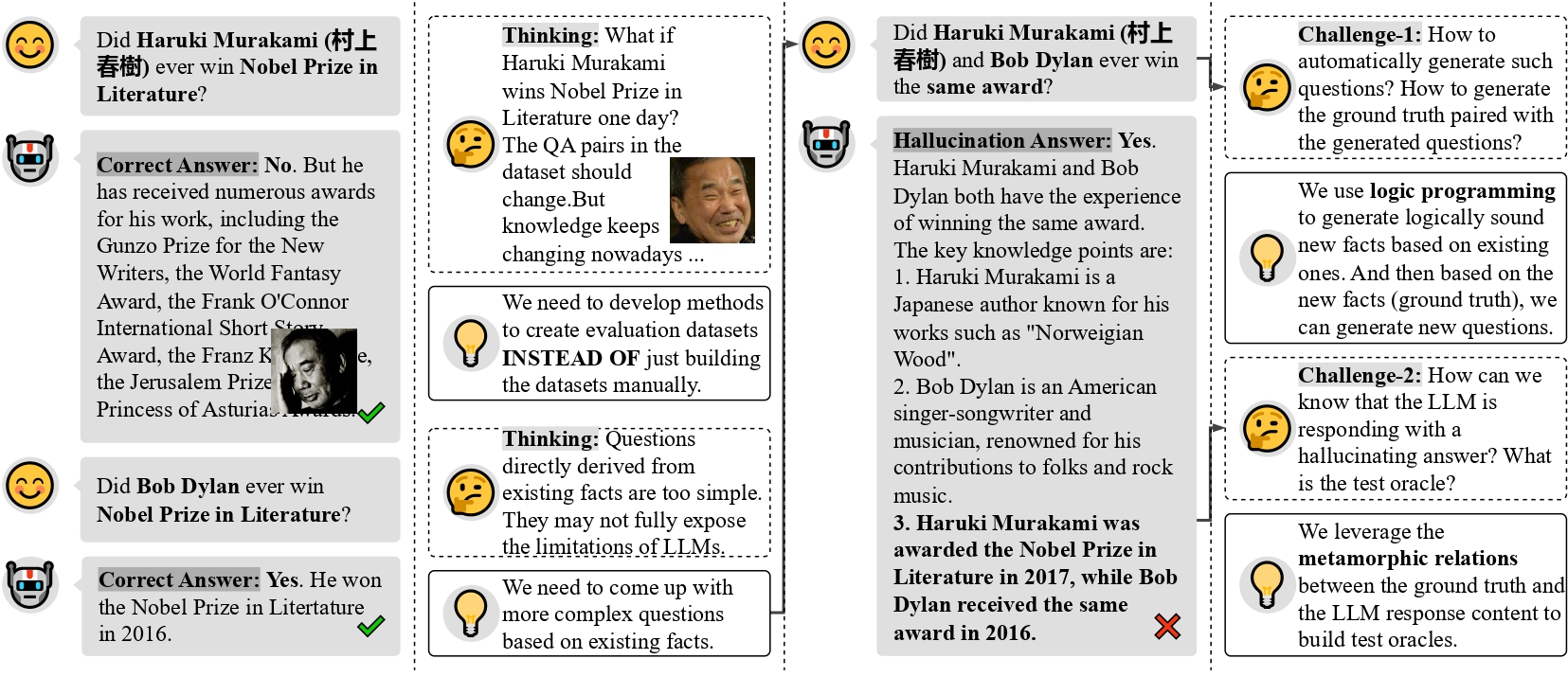

Fact-conflicting hallucinations in LLMs are prevalent and challenging to detect, but logic-programming-based metamorphic testing effectively generates diverse test cases and identifies reasoning errors across multiple models and domains.

2023

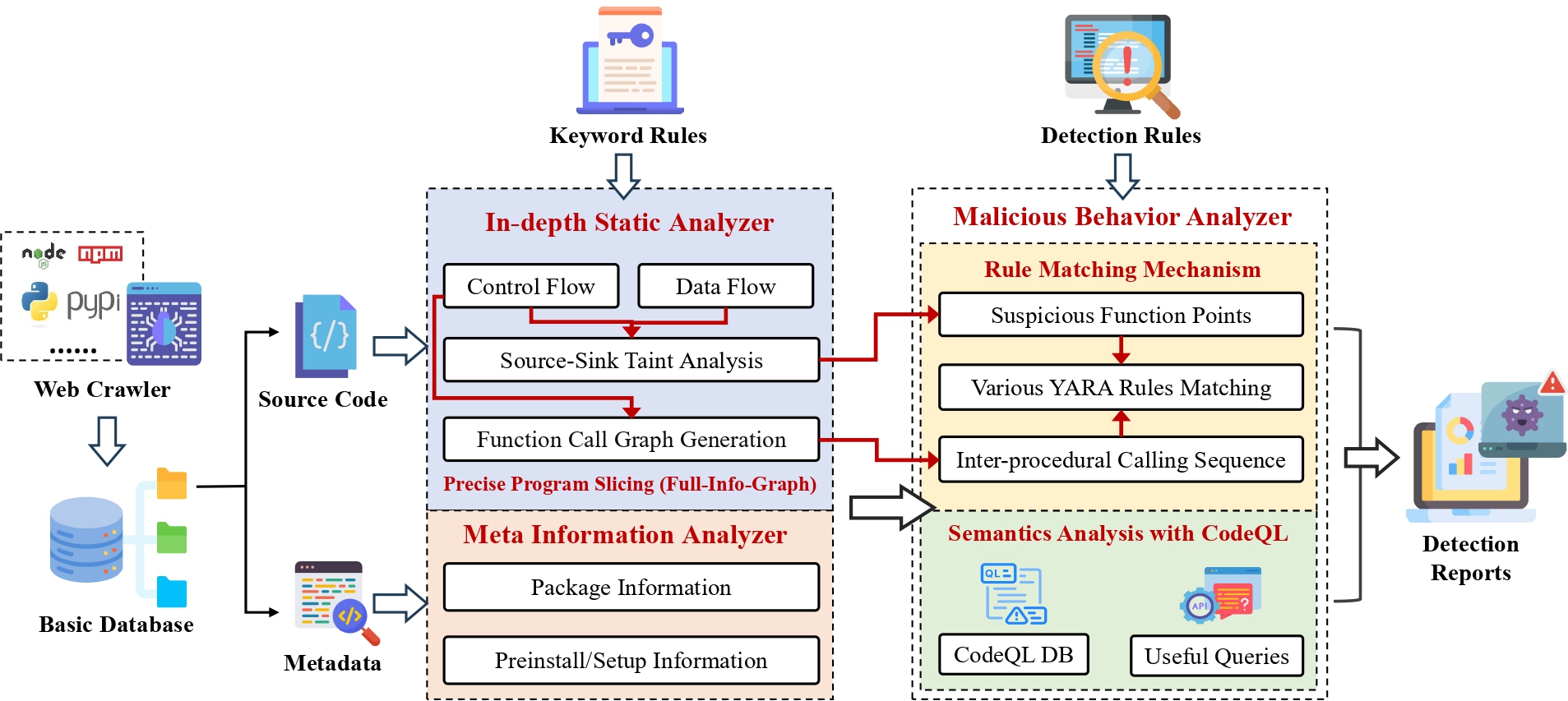

MalWuKong: Towards Fast, Accurate, and Multilingual Detection of Malicious Code Poisoning in OSS Supply Chains

Ningke Li; Shenao Wang; Mingxi Feng; Kailong Wang; Meizhen Wang; Haoyu Wang.

The 38th IEEE/ACM International Conference on Automated Software Engineering (ASE), Industry Challenge Track (full paper) 2023

An integrated multi-analysis framework combining code slicing and inter-procedural analysis improves malicious code detection in software registries.

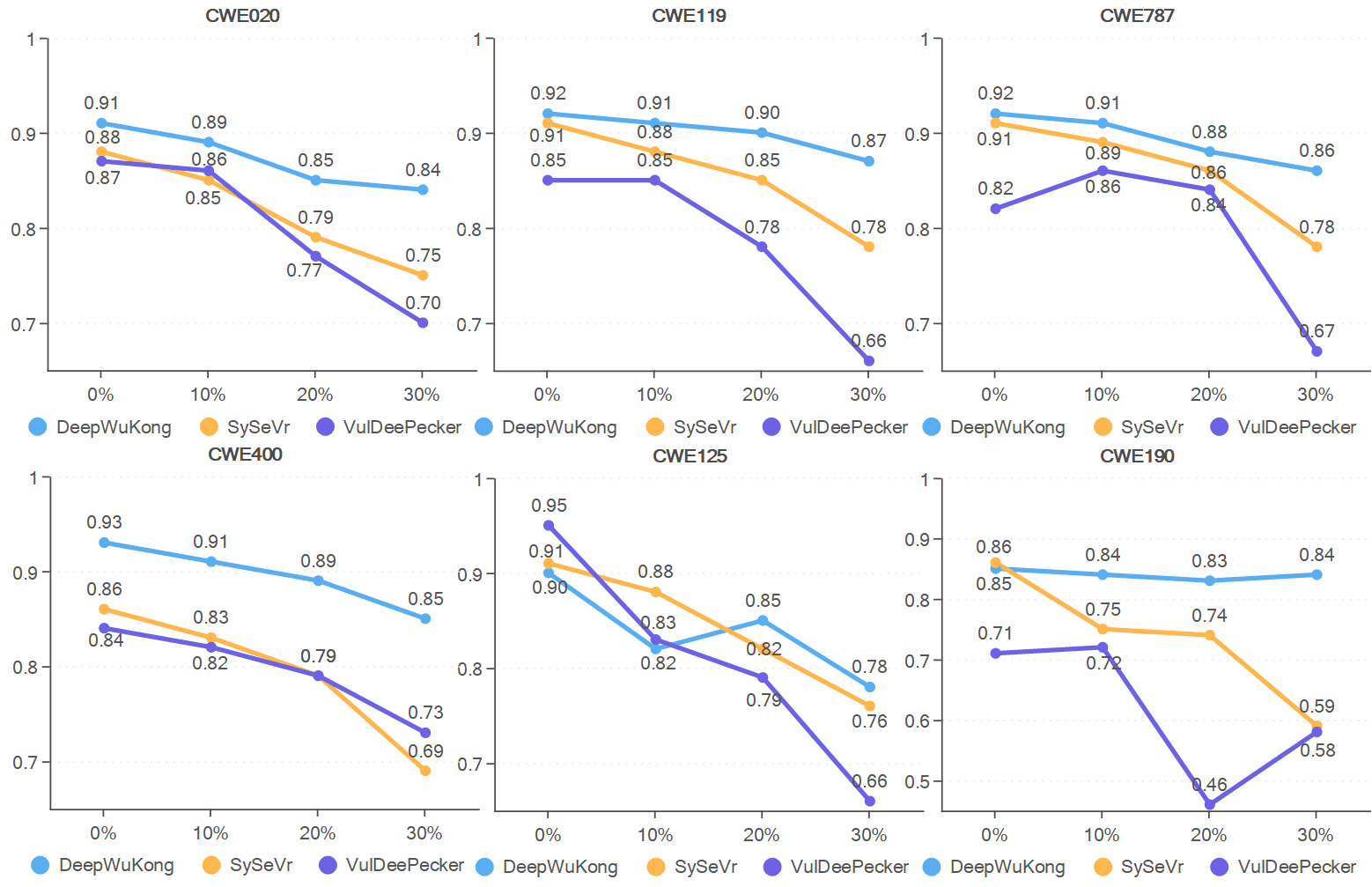

Understanding and Tackling Label Errors in Deep Learning-based Vulnerability Detection

Xu Nie*; Ningke Li*; Kailong Wang; Shangguang Wang; Xiapu Luo; Haoyu Wang. (* equal contribution)

ACM SIGSOFT International Symposium on Software Testing and Analysis (ISSTA) 2023

Label errors in vulnerability datasets can severely degrade deep learning-based detection, while dataset denoising methods improve model performance and data quality.

2022

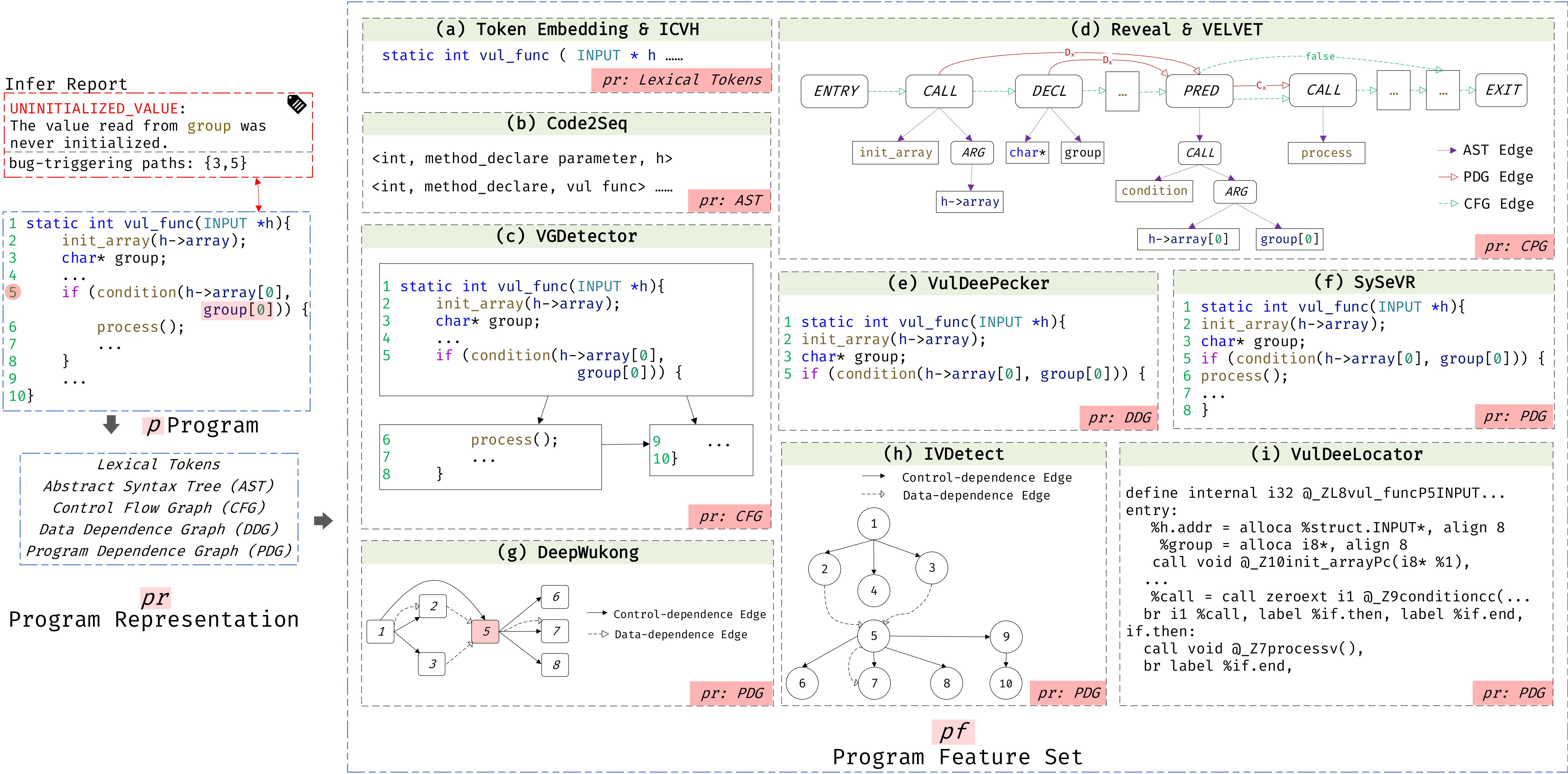

How About Bug-Triggering Paths? - Understanding and Characterizing Learning-Based Vulnerability Detectors

Xiao Cheng; Xu Nie; Ningke Li; Haoyu Wang; Zheng Zheng; Yulei Sui.

IEEE Transactions on Dependable and Secure Computing (TDSC), Vol.8. 2022

Current learning-based vulnerability detection achieves high classification scores but fails to identify bug-triggering paths, revealing a key gap with static analysis.
